On Thursday this week, OpenAI unveiled GPT-5, a unified system that automatically decides whether to deliver a quick response or engage in deeper, more deliberate reasoning. CEO Sam Altman described the shift as moving from “talking to a college student” with GPT-4 to “talking to a PhD-level expert” with GPT-5.

This release also cleans up what Altman called “a very confusing mess” of earlier models, including the o-series reasoning variants and GPT-4.5. The new automatic router takes the burden of model selection off users.
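To make the routing idea concrete, here is a minimal sketch of how a startup might prototype a similar "fast vs. deliberate" switch in its own product. Everything in it is an assumption for illustration: the cue list, the `Route` type, the `route` function, the word-count threshold, and the `"fast"` / `"deliberate"` labels are hypothetical, and nothing here reflects how OpenAI's router actually works. A production router would more likely rely on a learned classifier than on keyword matching.

```python
from dataclasses import dataclass

# Hypothetical cue phrases that hint a prompt needs slower, multi-step reasoning.
# Plain substring matching is crude (e.g. "improve" contains "prove"); this is a toy.
REASONING_CUES = ("prove", "derive", "step by step", "debug", "analyze", "compare")


@dataclass
class Route:
    path: str    # illustrative label: "fast" or "deliberate" (not a real model name)
    reason: str  # short explanation, useful for logging and later tuning


def route(prompt: str, max_fast_words: int = 40) -> Route:
    """Choose a fast or a deliberate path from simple surface features of the prompt."""
    text = prompt.lower()

    # 1. Any reasoning cue sends the prompt down the deliberate path.
    cue = next((c for c in REASONING_CUES if c in text), None)
    if cue is not None:
        return Route("deliberate", f"matched cue '{cue}'")

    # 2. Very long prompts are assumed to need more careful handling.
    if len(text.split()) > max_fast_words:
        return Route("deliberate", "long prompt")

    # 3. Everything else gets the quick path.
    return Route("fast", "short prompt with no reasoning cue")


if __name__ == "__main__":
    for p in (
        "What is the capital of France?",
        "Prove that the square root of 2 is irrational.",
    ):
        print(p, "->", route(p))
```

The point of the sketch is the product shape rather than the heuristic: the user types one prompt into one box, and the system records why it chose a path so the rule can be tuned from real usage.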

So, what can AI startups learn from OpenAI’s product releases and technology roadmap?

OpenAI Today

As of May 2025, OpenAI employs more than 4,400 people, an order of magnitude larger than almost any startup.

Engineering: 1,200–1,480 employees (roughly 27–34% of total staff)

Research & Technical Development: 1,400+ employees (~32% of staff)

These two groups form the core of OpenAI’s operation. The scale is far beyond that of a typical AI startup, yet its approach to launching, iterating, and consolidating products offers lessons that apply at any size.

Lessons for AI Startups

Iterate in public – From GPT-2’s cautious, partial release to ChatGPT’s viral breakout, OpenAI has relied on user feedback to shape development. Each release has informed the next.

Simplify the user experience – The journey from the original GPT in 2018 to today’s GPT-5 underscores the value of reducing cognitive load. Fewer choices, clearer options, and easier onboarding often matter as much as technical capability.

Consolidate when the time is right – GPT-5 shows that when multiple specialized tools exist, the next leap forward can come from combining them into a single, smarter system rather than adding more complexity.

Release with consistency, reliability, and real value – Frequent releases alone aren’t enough. OpenAI’s success shows that each one must be dependable and deliver meaningful improvements that users notice.

A Brief History of OpenAI’s GPT Releases

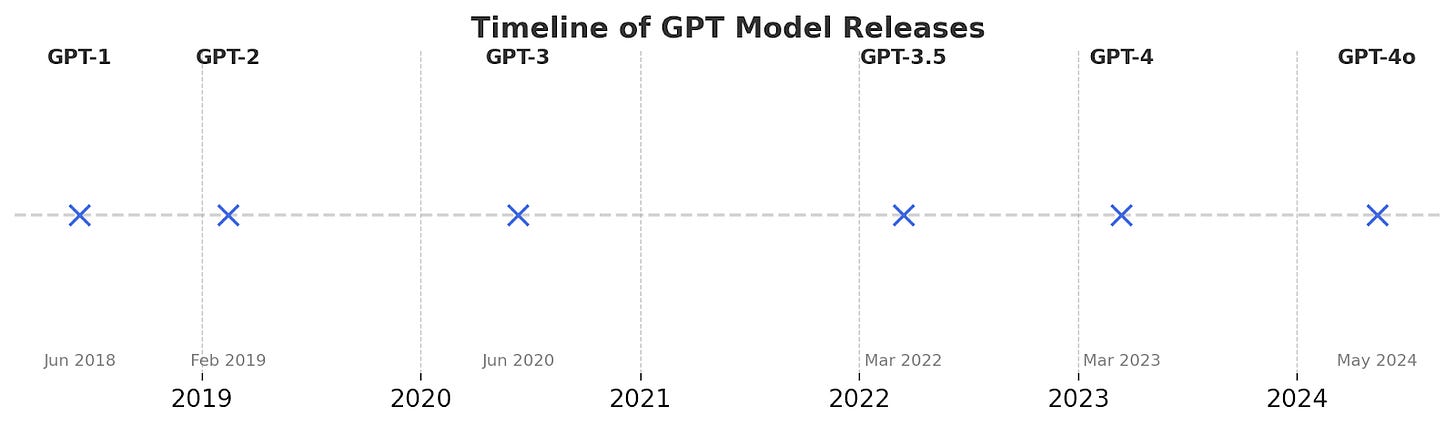

The original Generative Pre-trained Transformer debuted in June 2018. Trained on BookCorpus (a dataset of free, unpublished books), it showed that generative pre-training followed by light task-specific fine-tuning performs well on tasks such as question answering, though it struggled with long-form coherence. GPT-2 (Feb 2019) scaled to 1.5B parameters and was trained on WebText (OpenAI’s custom dataset of high-quality web pages). It produced more coherent and creative text, and OpenAI initially withheld the full model over misuse concerns before releasing it. GPT-3 (June 2020) leapt to 175B parameters and excelled at few- and zero-shot learning, which opened the door to chatbots, coding tools, and content creation.

InstructGPT (Jan 2022) refined GPT-3 with reinforcement learning from human feedback for safer, higher-quality instruction following. ChatGPT (Nov 2022), based on GPT-3.5, was optimized for conversation and fueled mass adoption for Q&A, tutoring, and creative tasks.

GPT-4 (Mar 2023) brought greater reliability, nuance, and stronger reasoning, while GPT-4 Turbo (Nov 2023) offered faster, cheaper performance with a 128k-token context window, multimodal input, and advanced analytics. GPT-4o (“omni,” May 2024) is fully multimodal, processing text, audio, and images in real time for fluid voice conversations, rapid reasoning, and seamless cross-modal understanding.

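The timeline for GPT releases looks like this:

June 2018 – GPT (the original Generative Pre-trained Transformer)

Feb 2019 – GPT-2

June 2020 – GPT-3

Jan 2022 – InstructGPT

Nov 2022 – ChatGPT (based on GPT-3.5)

Mar 2023 – GPT-4

Nov 2023 – GPT-4 Turbo

May 2024 – GPT-4o

Aug 2025 – GPT-5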

Conclusion

OpenAI’s path from GPT to GPT-5 shows that sustained impact comes from pairing technical breakthroughs with disciplined product delivery: iterating publicly, simplifying the user experience, consolidating when the time is right, and making sure each release delivers tangible value. For startups, the takeaway is that growth depends not only on building powerful technology but also on releasing it in ways that are consistent, user-centric, and strategically timed.